To recapitulate my earlier posts, gamma is a number that tells how quickly the luminance (L) produced by a television or computer monitor's screen rises as the video signal's analog voltage or digital code level (V) steadily ascends from the minimum possible value (black) to the maximum, which is in video parlance "reference white," assuming all three primaries, red, green, and blue, are equally represented in the signal.

Mathematically, the equation

$$L = V^{\gamma}$$

(γ is the Greek letter gamma) represents the transfer function of the monitor, assuming its BRIGHTNESS or BLACK LEVEL control has been carefully adjusted so that it produces minimum L precisely for minimum V.

If its BRIGHTNESS or BLACK LEVEL control has been set either too low or too high, the equation becomes

$$L = (V + e)^{\gamma}$$

where e represents the amount of the black-level adjustment error. Failure to take e into account leads to an erroneous assumption that γ itself has changed. If black level is set too low, γ seems to rise, as the picture seems to gain contrast. If black level is set too high, γ seems to go down, as the image contrast seemingly lessens.
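To see the black-level effect in numbers, here is a minimal Python sketch of the model above. It assumes a normalized signal (0 = black, 1 = reference white) and defines the "apparent" gamma as the exponent you would infer at a single mid-level signal if you wrongly assumed e = 0. The function names are illustrative only.

```python
import math

def display_luminance(v: float, gamma: float, e: float = 0.0) -> float:
    """Relative luminance from a monitor modeled as L = (V + e) ** gamma.

    v is the normalized signal; e is the black-level (BRIGHTNESS) error.
    Negative sums are clipped to 0 (crushed blacks). Output is not
    renormalized, so with e > 0 reference white exceeds 1.
    """
    return max(v + e, 0.0) ** gamma

def apparent_gamma(v: float, gamma: float, e: float) -> float:
    """Exponent an observer would infer at this level by assuming L = V ** gamma.

    Requires 0 < v < 1 and v + e > 0, otherwise the logarithms are undefined.
    """
    return math.log(display_luminance(v, gamma, e)) / math.log(v)

for e in (-0.05, 0.0, 0.05):
    print(f"e = {e:+.2f}: apparent gamma at V = 0.5 is {apparent_gamma(0.5, 2.5, e):.2f}")
```

With a true gamma of 2.5, a black-level error of -0.05 makes the display behave like roughly 2.88 gamma at V = 0.5, and an error of +0.05 makes it behave like roughly 2.16.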

Video cameras are standardized with an inverse transfer function which, a bit oversimplified, looks like

$$V = L^{1/\gamma}$$

where L is now the luminance arriving at the camera from the scene, V is the signal the camera produces, and γ is (approximately) the assumed gamma of the TV monitor. The exponent here is for the purpose of gamma correction. It serves to (almost) neutralize the gamma of the TV, for an end-to-end power of (close to) one.

By "end-to-end power" I mean what you get when you multiply the mathematical power or exponent in the camera's equation by that in the monitor's equation, L = V raised to the power gamma. I parenthesized the words "approximately," "almost," and "close to" above because considerations of rendering intent or viewing rendering dictate that the end-to-end power actually ought to be somewhat greater than one.
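A short Python sketch of the end-to-end idea, assuming pure power functions (no linear segment near black) and normalized values: pushing a scene luminance through a camera exponent and then a display gamma gives the same result as raising it to the product of the two exponents. The function names are illustrative.

```python
def camera_encode(scene_luminance: float, exponent: float) -> float:
    """Simplified gamma correction (pure power, no linear toe): V = L ** exponent."""
    return scene_luminance ** exponent

def display_decode(signal: float, gamma: float) -> float:
    """Idealized monitor with correctly set black level: L = V ** gamma."""
    return signal ** gamma

display_gamma = 2.5
for camera_exponent in (1 / 2.5, 0.5):
    end_to_end = camera_exponent * display_gamma
    # Passing mid-grey (0.18) through both stages equals raising it
    # to the product of the two exponents.
    out = display_decode(camera_encode(0.18, camera_exponent), display_gamma)
    print(f"camera {camera_exponent:.2f} x display {display_gamma} = {end_to_end:.2f}; "
          f"0.18 -> {out:.3f} (0.18 ** {end_to_end:.2f} = {0.18 ** end_to_end:.3f})")
```

With a camera exponent of exactly 1/2.5, mid-grey comes back unchanged; with 0.5 the product is 1.25 and the same grey is reproduced at about 0.12, that is, with more contrast.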

As I said in my earlier post, one reason for this is that a video display's luminance levels are tiny fractions of the real-world luminances that arrive at the camera's image sensor from the original scene. Another is that we customarily frame video images in unnaturally dark surrounds and view them in darkened or semi-darkened rooms. A third is that our TV screens usually cannot achieve the ultra-wide contrast ratios found in nature.

These ideas about rendering intent and end-to-end power come from Charles Poynton's excellent textbook, Digital Video and HDTV: Algorithms and Interfaces. Poynton is a video and color imaging guru who speaks with a great deal of authority about such matters. I contacted him by e-mail and asked him to comment on Dr. Soneira's notion that our TVs at home ought to emulate the 2.2 gamma of studio monitors.

The issue is one that, after all, boils down to the end-to-end power of the video delivery system. When gamma correction in the video source (a camera or film scanner) uses an exponent whose advertised value is 0.45, which is close to 1/2.2, to deliver a video signal to a home TV whose gamma is 2.5, the end-to-end power is 0.45 × 2.5, or about 1.13. Poynton's book, however, reckons the effective exponent of such gamma correction at about 0.5, which with a gamma-2.5 TV gives 0.5 × 2.5, or 1.25. That figure tends to provide optimal results when the image is viewed in a dim-but-not-pitch-black environment, says Poynton's book.

But images that are viewed in total darkness ought to have 1.5 as their end-to-end power, Poynton says. If the original gamma-correction exponent is changed from 0.45 to 0.6, a gamma-2.5 TV yields that end-to-end power. (If the gamma correction stays the same, adjusting the TV's gamma to 3.33 would, my calculator tells me, have the same effect. But how many TVs can be adjusted to 3.33 gamma?)
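The arithmetic in the two paragraphs above reduces to products and quotients of exponents. This small Python sketch, with illustrative function names, evaluates the advertised 0.45 camera exponent alongside a roughly 0.5 effective one, the distinction Poynton draws later on.

```python
def end_to_end_power(camera_exponent: float, display_gamma: float) -> float:
    """System power: product of the camera's and the display's exponents."""
    return camera_exponent * display_gamma

def camera_exponent_for(target_power: float, display_gamma: float) -> float:
    """Camera exponent needed to hit a target end-to-end power on a given display."""
    return target_power / display_gamma

def display_gamma_for(target_power: float, camera_exponent: float) -> float:
    """Display gamma needed to hit a target end-to-end power with a given camera exponent."""
    return target_power / camera_exponent

print(f"advertised 0.45 x 2.5 = {end_to_end_power(0.45, 2.5):.3f}")
print(f"effective  0.50 x 2.5 = {end_to_end_power(0.50, 2.5):.3f}")
print(f"camera exponent for 1.5 on a 2.5 TV: {camera_exponent_for(1.5, 2.5):.2f}")
print(f"TV gamma for 1.5 with a 0.45 camera: {display_gamma_for(1.5, 0.45):.2f}")
```

It prints 1.125 for 0.45 × 2.5, 1.250 for 0.5 × 2.5, 0.60 for the camera exponent that reaches 1.5 on a 2.5-gamma TV, and 3.33 for the TV gamma that reaches 1.5 with an unchanged 0.45 camera.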

So the effective gamma-correction exponent is crucial to rendering intent. But here's where the studio monitor, used by "the creative people involved in making a program [to] approve their final result," as Mr. Poynton so succinctly puts it in his e-mail reply to me, makes its crucial appearance.

The studio monitor has, of course, its own gamma exponent, which determines how much image contrast there is in movie scenes that are rendered on its screen. If the "creative people" in, say, a DVD post-production facility don't see enough contrast, they can in effect raise the original film-to-video scanner's gamma-correction exponent to provide a more contrasty result on the eventual DVD.

But what happens when the post house monitor has, say, 2.2 gamma and your TV's is fully 2.5? The end-to-end power of the video delivery system as a whole thereby rises above what it would otherwise be, right?

That's not necessarily bad, mind you. Remember the example I gave above, in which hiking the gamma-correction exponent from 0.45 to 0.6 made for an ideal image as viewed in a totally dark viewing environment on a gamma-2.5 TV? It suggests that "creative people" in post houses, who use (according to Dr. Soneira) gamma-2.2 studio monitors to tweak images as they are being viewed in the post facility under subdued lighting conditions, wind up producing just the right amount of image contrast for DVDs watched in pitch-dark home theaters whose displays have gamma figures notably higher than 2.2. True?
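One way to quantify the studio-versus-home mismatch, under the simplifying assumption that grading fixes an effective source exponent equal to the approved end-to-end power divided by the studio monitor's gamma. The 1.25 approval target below is a hypothetical value chosen for illustration, not a figure from Dr. Soneira or Mr. Poynton.

```python
def home_end_to_end(approved_power: float, studio_gamma: float, home_gamma: float) -> float:
    """End-to-end power at home for a picture approved on a studio monitor.

    Grading bakes in an effective source exponent of approved_power / studio_gamma;
    the home display then multiplies that exponent by its own gamma.
    """
    effective_exponent = approved_power / studio_gamma
    return effective_exponent * home_gamma

# Hypothetical: the creative people approve a 1.25 end-to-end power on their monitor.
for studio, home in ((2.2, 2.5), (2.4, 2.5), (2.4, 2.2)):
    print(f"studio {studio}, home {home}: {home_end_to_end(1.25, studio, home):.2f}")
```

A picture approved at 1.25 on a 2.2 monitor lands at about 1.42 on a 2.5 TV, moving toward the 1.5 suggested for totally dark rooms. Approved on a 2.4 monitor, it lands at about 1.30 on a 2.5 TV and about 1.15 on a 2.2 TV.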

Well, maybe. Mr. Poynton now says, in his kind e-mail response to me, "Current practice — as far as I can determine, after a decade or more of work — is that studio monitors have 2.4 gamma" (!). He also suggests that he now finds 2.4 to be a "more realistic" estimate of the inherent gamma of a CRT, a fact of life upon which the need for gamma correction was originally based. Studio monitors, of course, are usually CRTs.

Meanwhile, owing to what are perhaps misinterpretations by TV makers of official standards for video-production engineers and television studios, consumer TVs may be getting built-in gamma exponents less than 2.4. Poynton:

Rec. 709 [the broadcast standard for modern digital HDTV] standardizes the factory setting of a camera's gamma correction, but fails to mention viewing rendering, and misleadingly includes an inverse (code-to-light) function. Inclusion of the inverse function suggests its use in a monitor, but actually the function would yield scene-referred values, not display-referred (rendered) values. And no video textbook — save mine — even mentions the issue! It's a mess.

I think that by "suggests its use in a monitor" he means, here, that Rec. 709 specifies a camera function whose gamma-correction exponent amounts to an "advertised power" of 0.45, roughly 1/2.2, so the inverse function it also includes has a power of roughly 2.2, a number easily mistaken for a monitor's gamma. "Advertised power," in Poynton's terms, refers to the fact that "taking into account the scaling and offset required to include the linear segment [at the lower end of the curve, the effective exponent] is effectively 0.51." But that's not the key thing here.
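The gap between the advertised 0.45 and an effective exponent near 0.5 can be checked against the Rec. 709 camera curve itself, using the coefficients published in the standard: a slope of 4.5 below a scene luminance of 0.018, otherwise 1.099 × L^0.45 - 0.099. The sketch computes a "local" exponent, the pure power function that passes through the same point at a given luminance. That measure is an illustrative choice here, not necessarily how Poynton arrived at 0.51.

```python
import math

def rec709_oetf(L: float) -> float:
    """Rec. 709 camera transfer function: scene luminance 0..1 -> signal 0..1."""
    if L < 0.018:
        return 4.5 * L                  # linear segment near black
    return 1.099 * L ** 0.45 - 0.099    # "advertised" 0.45 power, scaled and offset

def local_exponent(L: float) -> float:
    """Exponent p of the pure power V = L ** p passing through the same point (0 < L < 1)."""
    return math.log(rec709_oetf(L)) / math.log(L)

for L in (0.05, 0.18, 0.5, 0.9):
    print(f"L = {L:4.2f}: V = {rec709_oetf(L):.3f}, local exponent = {local_exponent(L):.3f}")
```

The local exponent runs from about 0.50 near white to about 0.52 at 18% grey and about 0.56 at 5%, never as low as the advertised 0.45.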

The key thing is, rather, that all manner of people, TV makers included, see that advertised exponent of roughly 1/2.2 or 0.45 codified in the Rec. 709 standard and think consumer HDTVs ought consequently to have a 2.2 gamma exponent.

So even CRT-based consumer HDTVs (what few of them there are) may be using digital signal processing (DSP) to change what would otherwise be an inherent 2.4 gamma to 2.2! And makers of non-CRT displays — plasmas, LCDs, etc. — are following suit. Their (DSP-imposed) gamma figures are basically the same as modern CRTs': 2.2, or thereabouts.
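In principle, that kind of DSP remapping can be as simple as a lookup table: pre-distorting each code as V' = V^(target/native) makes a panel with native gamma "native" produce L = V^target. The sketch below is hypothetical and does not describe any particular manufacturer's processing; the function name and the 8-bit table size are assumptions for illustration.

```python
def gamma_remap_lut(native_gamma: float, target_gamma: float, bits: int = 8) -> list[int]:
    """Lookup table making a display with native_gamma behave like target_gamma.

    Each input code V (normalized) is replaced by V ** (target / native),
    so the panel's own response (V') ** native equals V ** target.
    """
    top = (1 << bits) - 1
    ratio = target_gamma / native_gamma
    return [round(top * (code / top) ** ratio) for code in range(top + 1)]

# Make an inherently 2.4-gamma display behave as if it were 2.2.
lut = gamma_remap_lut(native_gamma=2.4, target_gamma=2.2)
print(lut[0], lut[64], lut[128], lut[255])
```

Black and white stay fixed while mid-range codes are pushed up (code 128 maps to roughly 136), lightening the midtones as a lower gamma should. At only 8 bits some output codes get skipped near black and others merged near white, which hints at why higher internal precision is desirable for this kind of processing.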

Mr. Poynton brings up another subtle but interesting point in his e-mail to me:

Rec. 709 has an advertised power of 0.45 but taking into account the scaling and offset required to include the linear segment [in the region at the lower end of the curve, near black] it is effectively 0.51. For 2.4-power display, end-to-end power is about 1.2. That's appropriate for a daylight scene with diffuse white at about 30,000 [cd/m²]. Viewing rendering needs to be reduced if the scene is shot at candlelight: End-to-end power should then drop to about 1.1, requiring effective 0.46 at the camera (requiring reducing advertised gamma to about 0.40).

My interpretation: in a brightly lit daylight scene, sunlight will reflect off a white sheet of paper with a luminance of about 30,000 candelas per square meter, or 30,000 cd/m². A televised image of that scene needs an end-to-end power of about 1.2 when viewed (I assume) in dimly lit but not pitch-black environs.

But a scene shot in candlelight will have a maximum luminance lower than that by several orders of magnitude. The TV screen's output luminance will now be able to nearly match that of the original scene. Since one of the main reasons for using an end-to-end power well greater than 1.0 has disappeared for this particular scene, end-to-end power "should then drop to about 1.1."

Since the TV cannot be expected to make that gamma adjustment on the fly, the effective power or exponent of the camera (or film scanner or post-house tweaking station) ought to drop to 0.46. In view of the difference between effective power and advertised power, the latter ought to be reduced to 0.40 for a candlelit scene.
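The candlelight arithmetic can be reproduced in a few lines of Python. Beyond the figures in Poynton's e-mail, the only assumption is that the advertised exponent scales in proportion to the effective one (by 0.45/0.51); that proportional scaling is a simplification made here, not his stated method.

```python
display_gamma = 2.4
advertised, effective = 0.45, 0.51  # Rec. 709 figures quoted in Poynton's e-mail

# Daylight scene: effective camera exponent times display gamma.
daylight_power = effective * display_gamma                      # about 1.22

# Candlelit scene: target end-to-end power of about 1.1.
candle_effective = 1.1 / display_gamma                          # about 0.46

# Assumption: the advertised exponent scales in proportion to the effective one.
candle_advertised = candle_effective * advertised / effective   # about 0.40

print(f"{daylight_power:.2f} {candle_effective:.2f} {candle_advertised:.2f}")
```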

I admit that all this stuff about gamma may seem like material for a Ph.D. thesis. Again, why should we care?

The main reason is that the best front- and rear-projection HDTVs today, properly adjusted and calibrated and with excellently produced video source material, can produce images that are stunningly film-like. Flat-panel displays are not far behind. We are very close to home-theater nirvana.

Achieving proper end-to-end power figures will put us even closer.

It looks to me as if that holy grail requires co-operation between program producers such as home-video post houses and HDTV makers. The people responsible for scanning films and tweaking the image for consumer DVDs have some latitude to adjust their effective gamma-correction exponents on a scene-by-scene basis, as Mr. Poynton suggests. But because gamma correction has to stick to providing a digital signal that can be encoded without artifacts in just 8 bits per color per pixel, that latitude is limited.
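A rough illustration of why that latitude is limited: a larger encoding exponent leaves fewer 8-bit codes for the deepest shadows, which invites visible banding. This Python sketch assumes a pure power-law encoding without Rec. 709's linear segment, so its counts are indicative only; the function name is illustrative.

```python
def shadow_codes(luminance_limit: float, exponent: float, bits: int = 8) -> int:
    """Count the code values that represent scene luminances from 0 up to luminance_limit.

    Assumes a pure power-law encoding V = L ** exponent quantized to `bits` bits,
    ignoring Rec. 709's linear segment near black.
    """
    top = (1 << bits) - 1
    highest_code = round(top * luminance_limit ** exponent)
    return highest_code + 1  # codes 0 .. highest_code

for exponent in (0.45, 0.6, 1.0):
    print(f"exponent {exponent}: {shadow_codes(0.01, exponent)} codes for the darkest 1%")
```

For the darkest 1% of scene luminance it yields 33 codes with a 0.45 exponent, 17 with 0.6, and only a few with a linear (1.0) encoding.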

So it seems to me that our HDTVs need gamma adjustment capabilities.

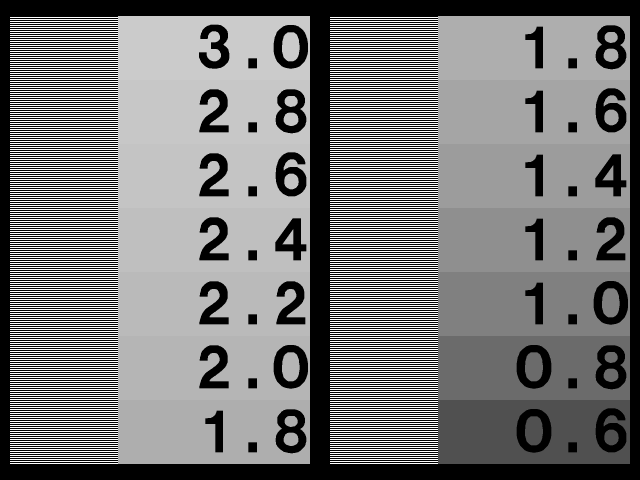

HDTVs and front projectors with the ability for the end user to tailor gamma already exist, of course. As far as I can tell, gamma adjustment is even becoming a common feature on pricey high-end HDTVs. With these TVs, users are able to change the gamma setting to take into account such things as how dark or light their viewing environment is and what their own preferences are concerning image contrast. Users can also change gamma for DVDs or other video sources they feel have been rendered too dark or too light.

Those end-user gamma adjustments ought now to enter the mainstream. And they ought to be implemented in such a way as to tell the user exactly which gamma exponent (2.2? 2.4?) the TV is using.

In fact, it would be nice if TV makers would build test patterns and software routines similar to SuperCal into their TVs' firmware, so that users could measure and calibrate gamma. For that matter, why not include calibration patterns for black level, white level, hue and saturation, etc.?
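As a sketch of what such a built-in routine might compute: read the luminance of a few gray patches with a meter, normalize against the black and white readings, and fit the exponent by least squares on a log-log scale. This is a hypothetical illustration, not SuperCal's actual algorithm; the function name is made up, and the readings below are synthetic.

```python
import math

def estimate_gamma(levels, readings, black, white):
    """Least-squares gamma from meter readings of gray patches.

    levels: normalized patch signal levels (0..1); readings: measured luminance
    in cd/m^2; black/white: readings for the 0% and 100% patches.
    Fits ln(L_norm) = gamma * ln(V), a line through the origin, because the
    model L = V ** gamma already pins L = 1 at V = 1.
    """
    num = den = 0.0
    for v, lum in zip(levels, readings):
        if 0.0 < v < 1.0:
            x = math.log(v)
            y = math.log((lum - black) / (white - black))  # requires lum > black
            num += x * y
            den += x * x
    return num / den

# Synthetic readings from a hypothetical set with gamma 2.4,
# 0.05 cd/m^2 black and 100 cd/m^2 white.
black, white = 0.05, 100.0
levels = [i / 10 for i in range(1, 10)]
readings = [black + (white - black) * v ** 2.4 for v in levels]
print(f"estimated gamma: {estimate_gamma(levels, readings, black, white):.2f}")
```

On these synthetic readings it recovers the 2.4 used to generate them. Real readings would scatter around the fitted line, and any patch whose reading does not exceed the black reading would have to be dropped, since its logarithm is undefined.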